Running ARGUS TV in a Virtual Environment

As ARGUS TV is built on a service-oriented architecture, you can already install its various components on separate systems. This article will help you understand how to install ARGUS TV in one or more virtual machines.

Most components of ARGUS TV need no special care when installed in a virtual machine, as long as the operating system meets all requirements of that component. These are the same as on a physical system, for example a network connection. For details, please refer to the article System_Requirements.

The ARGUS TV Recorder component however does require a connection to the real, physical world by accessing the tuner hardware. Thus installing this component in a virtual machine provides an extra challenge, which we want to take on in this article.

Purpose / Advantages

There are several advantages to virtualizing your infrastructure. These general reasons apply to an ARGUS TV installation as well.

1. Virtualization lets you consolidate hardware by moving separate operating systems and their applications into virtual machines and running them on one physical system (or at least on fewer systems than you used before going virtual ;-). Sharing resources this way saves infrastructure space, costs and electricity.

2. Virtual machines are easier to manage and faster to deploy. Once your environment is fully set up, you can install a new system or revert to a previously installed (disk) state with the click of a button. Virtualization also makes it easy to build systems just for tests or temporary use, to spread your applications across several machines in order to split workloads, or to stabilize your environment by avoiding dependencies and cross effects (e.g. when drivers, libraries or installed packages interfere with each other on the same system). Also, many specialized systems are easier to maintain than one large multi-purpose installation.

3. Virtualization has long been established in enterprises and their infrastructures. Using it at home not only gives you access to the features mentioned above but is also a great way to learn and master the technology.

How to start

For home use, the following scenarios for virtualized systems could be examples to start with:

- Run ARGUS TV Recorder / MediaPortal TV Server with your tuners 24x7 (and record to a central NAS)

- Run the NAS in a virtual machine as well

- Run your HTPC in a virtual machine

- Run some specialized systems for

  - Central mail / groupware for your family

  - Internet web proxy and/or security gateway with full inspection firewall, DHCP and/or DNS for your home

  - Media streaming, for example via DLNA to clients in your home such as smartphones

  - Desktop VMs for your family with central backup & restore and maybe special purposes (e.g. a developer machine)

  - Smart home control

  - VoIP telephony PBX

In this article, we will only focus on the first example, running ARGUS TV Recorder with your tuners in a VM.

Please note: Virtualizing your HTPC is currently a real challenge and not a task for beginners.

Prerequisites

As said before, the software requirements for ARGUS TV will not change when running in a virtual machine. So you need to provide all these just like in a physical installation.

In addition, to virtualize your setup you will need some more software and maybe some hardware parts, depending on what your primary goal is and what your tuner hardware looks like.

USB-based tuners have the lowest prerequisites. Tuners based on PCI/PCIe cards have the highest.

Virtualization Software

The first prerequisite, common to all setups, is that you need to obtain, install and run an "additional piece of software". This "additional piece of software" is the virtualization software. It comes in two types, as there are two types of virtualization.

Type 1 is called full hardware virtualization and, as the name indicates, you will need hardware with some special features in order to employ it. The virtualization software for type 1 virtualization is called a hypervisor. The hypervisor is the operating system on the physical host (more like an embedded operating system, as it is small, stripped down and focused only on providing services to run virtual machines). It does not provide a GUI or services for users (as a desktop machine would). You need a separate desktop system to run the administration software that configures the virtualization setup. Examples of type 1 virtualization software (hypervisors) are XEN, KVM and VMware ESXi.

Type 2 is called software virtualization. The virtualization software for type 2 virtualization is an application that can be installed in an existing system (a full operating system), such as a Windows or Linux desktop acting as the physical host. In general, this software requires more disk space to install and more RAM of its own to run on the host than a type 1 hypervisor. Performance will also be slower than with full hardware virtualization (although the most advanced type 2 virtualization software can make use of type 1 hardware virtualization features if the physical host supports them). Examples of type 2 virtualization software are OpenVZ (Linux), VMware Server (Linux), VMware Player (Linux, Win) and Oracle VirtualBox (Linux, Win).

Remark: Check on Hyper-V (for type and USB support) pending.

Building & Creating Virtualization

The second prerequisite is the set of resources required to "do the job". That includes the resources for building the base virtualization system (type 1 or type 2) on the physical host and the resources for each virtual machine. Common resources for both types are RAM, CPU, disk space and... software licenses.

Here are some sizing guidelines: RAM is not shared between active virtual machines, while CPU load is. RAM and CPU need to be allocated only for concurrently running virtual machines, while disk space must be sized for all virtual machines and their virtual disks, whether active or not.

A hypervisor requires far fewer resources for itself than type 2 virtualization software, where the physical host also needs resources for the host operating system (RAM, disk, CPU and maybe graphics). If you run the virtualization software as an application in Windows, you will need a Windows license too.
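The sizing guidelines above can be turned into a small calculation. The following is only a sketch: the VM names, sizes and host overhead figures are made-up example values, not recommendations, and the `VM` class is a helper introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    ram_gb: int
    disk_gb: int
    running: bool  # True if this VM runs concurrently with the others


def required_ram_gb(vms, host_overhead_gb):
    """RAM is not shared: add up all concurrently running VMs plus the host."""
    return host_overhead_gb + sum(vm.ram_gb for vm in vms if vm.running)


def required_disk_gb(vms, host_overhead_gb):
    """Disk space is needed for every VM, whether it is running or not."""
    return host_overhead_gb + sum(vm.disk_gb for vm in vms)


# Hypothetical example values for a small home setup.
example_vms = [
    VM("argus-recorder", ram_gb=5, disk_gb=60, running=True),
    VM("nas", ram_gb=2, disk_gb=40, running=True),
    VM("test-system", ram_gb=4, disk_gb=30, running=False),
]

if __name__ == "__main__":
    print("RAM needed (GB):", required_ram_gb(example_vms, host_overhead_gb=2))
    print("Disk needed (GB):", required_disk_gb(example_vms, host_overhead_gb=8))
```

The host overhead argument is where the difference between the two types shows up: for a type 2 setup it has to cover the full host operating system, while for a hypervisor it can be much smaller.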

Installing the hypervisor is the only time you need physical access to a terminal on the hypervisor machine, the host. After the basic install, which includes the network setup, all further management is done remotely. Thus, in order to manage the type 1 hypervisor setup and its virtual machines, you will need an additional system with management software installed. For XEN and KVM these are Linux/Unix based systems, and for VMware ESXi this is a Windows system with a management application called VMware vSphere Client installed.

Management of VMware ESXi and KVM is done remotely, so the management domain needs a network connection to the hypervisor. XEN needs a local install of its management domain/system, called the DOM0, on the physical host. In theory, for ESXi and KVM the management domain can be installed as a virtual machine too.

The smallest type 1 setup, including the management domain/system, is probably PROXMOX-VE. It provides a Debian/Linux based KVM hypervisor (and includes OpenVZ too) and a web-based management tool, so your management desktop machine just needs a network connection and a browser to perform the management tasks.

Access to Tuner Hardware

Back to our initial goal: in order to virtualize ARGUS TV, you will need access to the tuner hardware from within the virtual machine that runs ARGUS TV Recorder.

The last two type 2 applications mentioned above are able to provide access to USB 2.0 compatible devices from the host to the VM. So for a fast start with USB-based tuners, a type 2 virtualization with VirtualBox or VMware Player is all you need.

As said, type 1 virtualization needs hardware support. This support comes as a set of special features that must be present in the CPU and/or motherboard chipset and must also be supported and enabled by the BIOS of the motherboard in question. It is available on some models of both Intel and AMD based platforms.

Only one particular feature of the hardware virtualization feature set gives you the ability to pass through PCI/PCIe hardware to a virtual machine. On Intel based platforms, that hardware feature is named VT-d. Note: do not confuse it with the VT-x feature, which is hardware virtualization on/with the CPU only. VT-x is a lesser and different feature than VT-d. In general, a platform that supports VT-d also supports VT-x, but not the other way around.

The hypervisor must support that feature as well. ESXi, XEN (for x86) and KVM (for Linux) do.

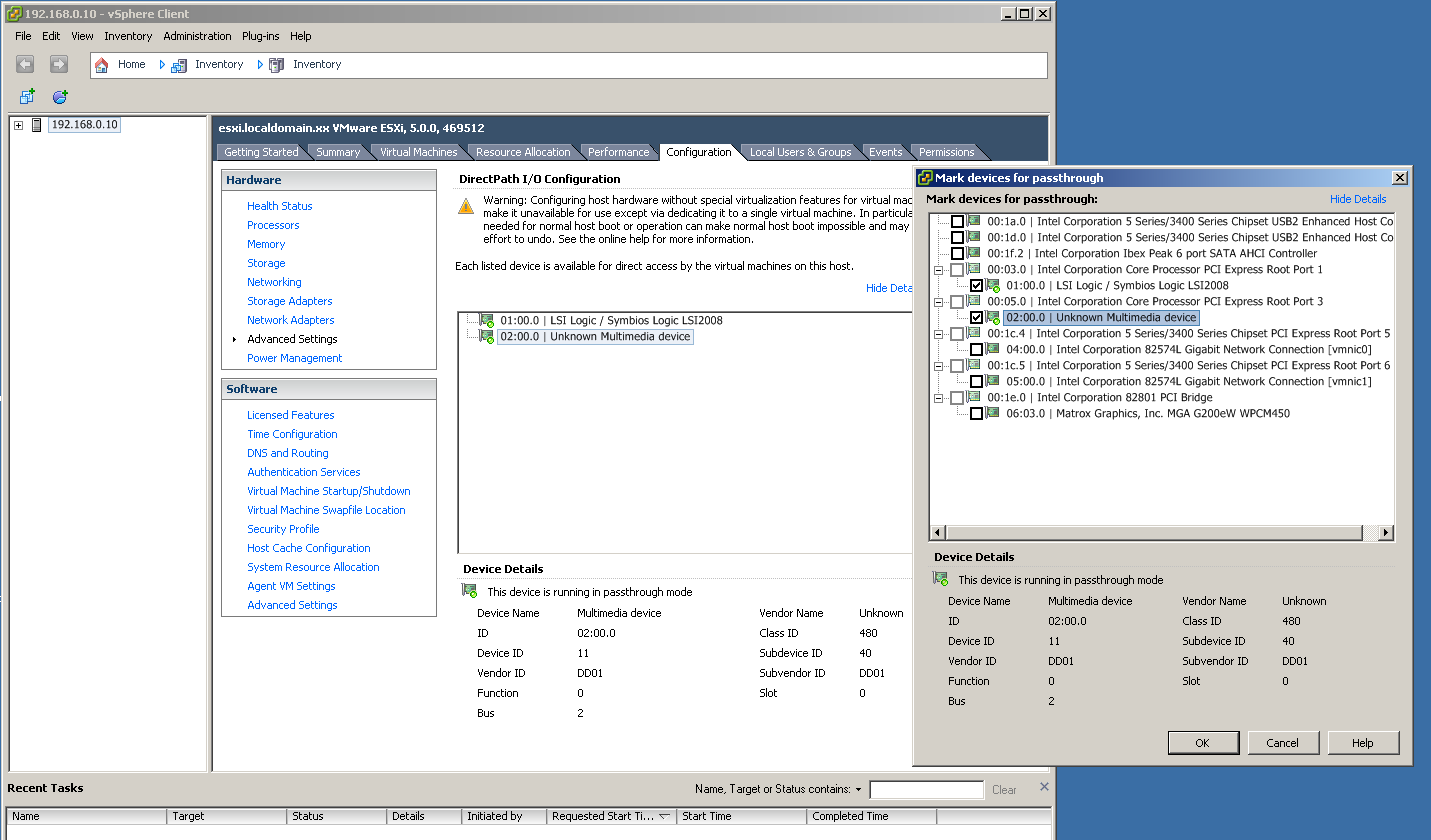

ESXi calls this feature vmdirectpath or DirectPath-I/O while KVM just calls it pcipassthrough.
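On a Linux host (as used for KVM), a first check is whether the CPU advertises hardware virtualization at all. The sketch below looks for the `vmx` (Intel VT-x) and `svm` (AMD-V) flags in `/proc/cpuinfo`. It is an illustration only: the function names are made up for this example, and the check says nothing about VT-d or about whether the feature is enabled in the BIOS.

```python
def virtualization_flags(cpuinfo_text):
    """Return the CPU virtualization flags ("vmx", "svm") found in cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only the "flags" lines list CPU feature flags.
        if sep and key.strip() == "flags":
            found.update(flag for flag in value.split() if flag in ("vmx", "svm"))
    return found


def describe(flags):
    """Map the detected flags to a short human-readable summary."""
    if "vmx" in flags:
        return "Intel VT-x reported by the CPU"
    if "svm" in flags:
        return "AMD-V reported by the CPU"
    return "no hardware virtualization flag found"


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            text = handle.read()
    except OSError:
        text = ""  # not a Linux system, or /proc is unavailable
    print(describe(virtualization_flags(text)))
```

Run it on the physical host, not inside a VM, since a guest usually sees a different set of CPU flags.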

Remark: Research for AMD features and AMD-V description pending.

How-To

The most common type 1 hypervisor for x86 hardware architectures is VMware ESXi, which is why our first how-to is based on ESXi vmdirectpath with a PCIe tuner card for ARGUS TV Recorder.

ESXi vmdirectpath with a PCIe tuner card

1. Set up/enable VT-x and VT-d in the BIOS

2. Install ESXi

2.1 Check whether DirectPath-I/O support is detected/enabled by your ESXi install

3. Install a Windows VM for ARGUS TV Recorder

4. Configure vmdirectpath for the tuner card in ESXi, then reboot the host

5. Enable/add the tuner card in the ARGUS TV VM configuration in ESXi

6. Start the Windows VM and install the tuner card drivers

7. Install ARGUS TV in the Windows VM

Enable USB-port passthrough in ESXi for USB based tuners

Note: you could also use the method from the previous how-to and pass the complete USB controller to a VM with vmdirectpath, since the USB controller is technically seen as being attached to a PCIe slot of your motherboard.

- Install ESXi

- Install a Windows VM for ARGUS TV Recorder

- Configure USB passthrough for the USB port of the tuner in the Windows VM

- Start Windows VM, install tuner card drivers

- Install ARGUS TV in Windows VM

PROXMOX-VE and KVM with PCI-passthrough for PCIe tuner card

- Set up/enable VT-x and VT-d in the BIOS

- Install Proxmox

- Install a Windows VM for ARGUS TV Recorder

- Configure pcipassthrough for tuner card

- Enable/add tuner card in ARGUS TV VM configuration in Proxmox

- Start Windows VM, install tuner card drivers

- Install ARGUS TV in Windows VM
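Before configuring passthrough on a Linux/KVM host, it can help to see whether the kernel has set up IOMMU groups and which group the tuner card belongs to. The sketch below reads the standard sysfs directory `/sys/kernel/iommu_groups`. The function names and the PCI address in the usage example are hypothetical, and this is an illustration under those assumptions rather than an official Proxmox procedure.

```python
import os


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Return {group_number: [pci_address, ...]} read from sysfs, or {} if absent."""
    groups = {}
    if not os.path.isdir(root):
        return groups
    for entry in os.listdir(root):
        devices_dir = os.path.join(root, entry, "devices")
        # Each numbered directory is one group; its "devices" directory
        # holds one entry per PCI device in that group.
        if entry.isdigit() and os.path.isdir(devices_dir):
            groups[int(entry)] = sorted(os.listdir(devices_dir))
    return groups


def group_of(groups, pci_address):
    """Return the group number containing pci_address, or None if not found."""
    for number, devices in groups.items():
        if pci_address in devices:
            return number
    return None


if __name__ == "__main__":
    found = iommu_groups()
    if not found:
        print("No IOMMU groups found; VT-d may be disabled or unsupported.")
    for number in sorted(found):
        print(number, " ".join(found[number]))
    # Hypothetical address; look up the real one for your tuner card.
    print("Tuner group:", group_of(found, "0000:01:00.0"))
```

If the tuner card shares its group with other devices, those devices typically have to be passed through together with it, which is worth knowing before choosing a slot for the card.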

VMware Player or VirtualBox with USB passthrough (Windows host)

Remark: Content tbd.

XEN and PCI passthrough

Remark: Content tbd.

Constraints and Hurdles

The main hurdle is finding the right hardware parts for type 1 virtualization. This information is not well documented for all platforms or all parts. The VT-d feature is mostly present in server class hardware, and BIOS support seems to be the greatest source of uncertainty and failures. The Intel world has more information and confirmed use than AMD based systems.

If you pass through more than one card, note that ESXi limits the number of cards that can be passed through at a time to 4. There are also reported side effects, depending on which slots of your motherboard you populate with the cards.

The passthrough feature was originally developed for use with network cards. It also works quite well with other card types. Cards from the server/enterprise domain seem to work better than cards targeted at the consumer market. Cards which are directly supported by the hypervisor seem to have a better chance of working.

Tuner cards are not very common and not enterprise grade, and the quality of their hardware, firmware or driver implementation may deviate from standards (sometimes violating them or pushing limits, as with graphics cards). There are no reports of tuner cards being officially supported by the hypervisor (ESXi, that is).

HCL (Hardware Compatibility or Whitebox List)

Please help us make the following list more complete to support other users.

| Motherboard | CPU make and model | Tuner card(s) make and model (and motherboard slot number if PCIe) | Physical setup (hypervisor, RAM) | VM setup (RAM, vCPU, VM NIC model used, Windows version in ARGUS TV VM) |
|---|---|---|---|---|
| Supermicro X8SIL-F (UP, 1156 socket, Ibex Peak chipset, BIOS V2.0) | Intel XEON L3426 (quad core 1.86GHz, 45W TDP, HT, VT-D) | Digital Devices Octopus CI (PCIe x1) with Two Dual-DVB-S2 cards, inserted into second PCIe x8 slot (counting from CPU socket outwards) | ESXi 5.0, 16GB (2x8GB) Reg-ECC, Note: in order for the NICs to work stable, disable ASPM in BIOS | 5GB, 2 vCPU, vmware3-net, Win7-64 HomePremium with VMware Tools installed, Comskip Post Processing |
| Intel DQ57TM iQ57, SATA2, GLAN | Intel Core i7 860 2.80GHz 2.5GT/s 8MB Box | Conceptronic PCI Adapter 3 Ports Firewire (see note 1) with 2 FireDTV DVB-S2 cards connected to it. (nowadays Digital Devices) | ESXi 5.0, 16GB (4x4GB) Non-ECC | 2GB, 1 vCPU, vmware3-net, Win7-32 Pro with VMware Tools installed |

1) Not working with ESXi 5.1, 5.5 or 5.5u1.